Nvidia Introduces Magic3D, Bringing 2D to Life

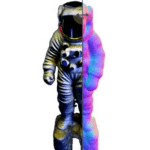

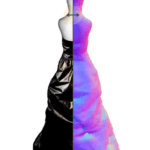

Nvidia introduces Magic3D, which lets users generate 3D assets without any 3D modeling expertise.

AI continues to open up faster and easier ways to create 3D content without having to learn any 3D modeling software. Magic Fabric previously covered the London-based startup Kaedim in an earlier interview, which showed a similar but more refined service that turns 2D images into 3D assets and is used by game companies. Nvidia's Magic3D similarly lets users generate high-quality 3D models from text prompts or 2D image inputs. Announced last week by Nvidia researchers, it points to a future where anyone, even without 3D software skills, can create and even modify 3D assets.

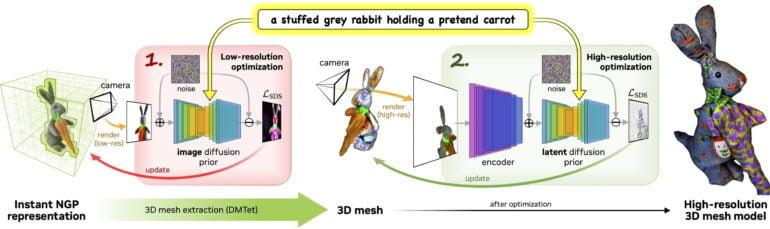

Like DreamFusion, Nvidia's Magic3D relies at its core on a text-to-image generative model. The 3D model is rendered from many different angles, and the image model judges those renders against the text prompt, guiding the 3D generation. Nvidia's research team uses eDiff-I, its own in-house image model, while Google's DreamFusion relies on Imagen. Given a few input images of a specific subject, Magic3D can also fine-tune the diffusion models with DreamBooth and then optimize the 3D model using the given prompts. Nvidia hopes that, as the technology matures, it can be used for asset creation in video game and VR development.

Google announced DreamFusion, a text-to-3D model, in September this year.

Also read about OpenAI's Shap-E.